Silicon and IP

Run AI applications at under 1 mW by skipping zero-valued connections and activations. Deploy as a co-processor chip or as tileable IP.

Femtosense was at CES 2022 running an ultra-low-latency speech enhancement demo. A video is nice, but hearing is believing. Reach out to experience our latest version live and in person. Visit us at CES 2023 if you are around!

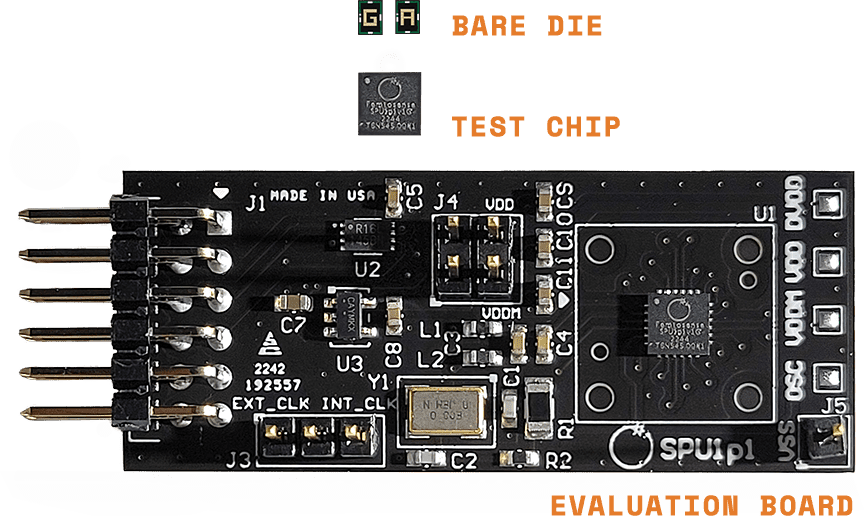

Introducing SPU-001, the world’s first dual-sparsity AI accelerator for smaller electronic devices. Bring more functionality to your products without affecting battery life or cost.
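"Dual sparsity" means skipping work whenever either a weight (a connection) or an activation is zero. This page does not describe how the SPU implements this in hardware, so the following is only a generic software sketch of the idea. It assumes a simple column-compressed weight format, and the names `to_sparse_columns` and `dual_sparse_matvec` are hypothetical, not part of the Femtosense SDK. The sketch counts the multiply-accumulates (MACs) actually performed, so you can compare them with the dense count of rows × columns.

```python
from typing import Dict, List, Tuple

# Hypothetical compressed format (an assumption, not the SPU's format):
# for each input index j, store only the nonzero weights of column j
# as (output_row, weight) pairs.
SparseColumns = Dict[int, List[Tuple[int, float]]]


def to_sparse_columns(weights: List[List[float]]) -> SparseColumns:
    """Keep only nonzero weights, grouped by input (column) index."""
    cols: SparseColumns = {}
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            if w != 0.0:
                cols.setdefault(j, []).append((i, w))
    return cols


def dual_sparse_matvec(
    cols: SparseColumns, x: List[float], n_out: int
) -> Tuple[List[float], int]:
    """Compute y = W @ x, skipping zero activations and zero weights.

    Returns the output vector and the number of MACs performed.
    """
    y = [0.0] * n_out
    macs = 0
    for j, a in enumerate(x):
        if a == 0.0:
            continue  # activation sparsity: skip the entire column
        for i, w in cols.get(j, []):  # weight sparsity: stored nonzeros only
            y[i] += w * a
            macs += 1
    return y, macs


if __name__ == "__main__":
    W = [
        [0.5, 0.0, 0.0, 2.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 3.0, 0.0, 0.0],
    ]
    x = [1.0, 0.0, 0.0, 4.0]
    y, macs = dual_sparse_matvec(to_sparse_columns(W), x, n_out=3)
    print(y, macs)  # [8.5, 0.0, 0.0] 2  (a dense product would need 12 MACs)
```

In this toy case, weight sparsity alone would cut the work from 12 MACs to 4 (the stored nonzeros). Also skipping the two zero activations reduces it to 2. The savings compound because the two kinds of sparsity are independent.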

We’ve built our hardware platform to achieve the efficiency of an ASIC while retaining the flexibility of a general-purpose accelerator. The SPU is easy to program, easy to simulate, and easy to deploy, so engineers and product managers can bring innovative, class-leading products to market faster.

We’ve built our software development platform to help companies of all sizes deploy optimal sparse AI models for tomorrow’s applications and form factors. Our SDK contains advanced sparse model optimization tools, a custom compiler, and a fast performance simulator. It’s everything you need from exploration to deployment.
